Keeping an environment continuously operational is essential for business continuity and for avoiding costly service interruptions. High availability is therefore fundamental to any robust technology and infrastructure strategy, because it translates into reliable user experiences and consistent, high-quality services.

In this article, we will explore the crucial role that redundancy plays in data centers as a strategy to strengthen high availability. We will also analyze how different redundancy models contribute to this goal and how to balance cost against reliability to achieve an optimal solution.

The importance of redundancy in data centers

Redundancy in data centers is the practice of duplicating critical components or functions within a system to increase its reliability. It acts as an insurance policy at both the hardware and the protocol level, keeping the system operational even when parts of the infrastructure fail.

Designing a system with redundancy ensures that if one component fails, another can immediately take its place without interrupting service. This matters because outages are common: according to an Uptime Institute survey on data center resiliency, 80% of data center managers and technicians have experienced some form of outage in the last three years, and the repercussions can be severe.

One example is the 2007 incident at Los Angeles International Airport, where a computer failure disrupted international flights for nine hours, highlighting the cascading effects of system failures. Another example from the aviation sector is the 2017 British Airways outage in the United Kingdom, where a major failure at the airline's data center led to the cancellation of hundreds of flights and affected more than 75,000 passengers. The cause was attributed to a power supply failure and a problematic system recovery process, underscoring the importance of proper infrastructure management and redundancy in data centers.

Redundancy is a data center's silent guard against data loss, a buffer against downtime, and a catalyst for operational efficiency. Beyond protecting data, it ensures business continuity, builds customer trust and satisfaction through an uninterrupted experience, and shields the business from financial losses caused by operational outages.

Although the initial costs of implementing redundancy measures may seem high, it’s vital to shift the perspective: investing in redundancy is not just an expense, but a safeguard. It is a proactive measure to prevent the potentially devastating consequences of system failures, which can cost far more in the long run than the initial investment in redundant systems.

Key components requiring redundancy

To ensure high availability, several data center components must have redundancy:

- Computing nodes or servers: Redundant compute nodes or dedicated servers allow workloads to move smoothly to backup servers when a failure occurs, without interrupting service. The exact requirements depend on each client and project, but whenever the RTO (Recovery Time Objective) and RPO (Recovery Point Objective) must be close to or equal to zero, multiple nodes are required, possibly distributed across several data centers.

- Redundant storage systems: These ensure that there is no single point of failure within the data storage infrastructure, protecting against data loss and allowing for rapid recovery and continuity.

- Redundant cooling systems: These are essential given the heat generated by high-power computing. Redundant CRAC (Computer Room Air Conditioning) units keep equipment from overheating and maintain optimal performance even if one unit fails.

- Network redundancy: This minimizes the risk of connectivity loss due to a single point of failure in the network. Stackscale exemplifies this with its geographically dispersed data centers featuring redundant network connections.

- Redundant power supplies: Multiple UPS units and redundant battery rooms, such as those in Stackscale's data centers, keep the facility operating smoothly even when the power supply is interrupted.

By building redundancy into servers (nodes), storage, power, cooling, and network systems, data centers like those operated by Stackscale are well suited to ensuring high availability. This multi-layered approach adds protection at every level and keeps services running with maximum uptime, making it a strong fit for all types of mission-critical solutions.

The role of redundancy in enhancing high availability

High availability refers to a system’s ability to remain operational and accessible, minimizing the possibility of downtime that could disrupt business operations. It is a key performance metric for any data center, reflecting its reliability and efficiency.

Redundancy is one of the main mechanisms for achieving high availability. It acts as a safety net: if one component fails, another immediately takes its place, mitigating risk and improving fault tolerance.
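To put a number on this, a common back-of-the-envelope model treats redundant copies of a component as running in parallel: the system is down only if every copy is down at the same time, so with n copies of a component that is available a fraction a of the time, availability is 1 - (1 - a)^n. The Python sketch below assumes independent failures and instantaneous failover, which real systems only approximate; the function name and the 99% figure are purely illustrative.

```python
def parallel_availability(component_availability: float, copies: int) -> float:
    """Availability of `copies` redundant components where any single one suffices.

    Assumes failures are independent and failover is instantaneous.
    """
    if not 0.0 <= component_availability <= 1.0:
        raise ValueError("availability must be between 0 and 1")
    if copies < 1:
        raise ValueError("need at least one component")
    # The system is down only when every copy is down at the same time.
    return 1.0 - (1.0 - component_availability) ** copies


if __name__ == "__main__":
    # Illustrative per-component availability of 99%.
    for n in (1, 2, 3):
        print(n, f"{parallel_availability(0.99, n):.6f}")
```

Under these assumptions, adding a second copy of a 99%-available component raises availability to 99.99%. Correlated failures, such as two servers sharing the same power feed, would reduce that gain.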

For example, in a system with network redundancy, if a network link fails, data flow is instantly redirected through alternative routes, maintaining connectivity. Without redundancy, a single point of failure could lead to significant downtime, disrupting services and causing financial and reputational damage.
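The failover decision described above can be sketched as choosing the highest-priority link that is still healthy. This is a minimal illustration only: the `Link` type, the link names, and the `select_path` function are hypothetical, and real networks usually perform this rerouting through routing protocols or link aggregation rather than in application code.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Link:
    name: str      # hypothetical link identifier, e.g. "uplink-a"
    healthy: bool  # in practice this would come from a health check or routing protocol


def select_path(links: list[Link]) -> Link:
    """Return the first healthy link in priority order (primary first)."""
    for link in links:
        if link.healthy:
            return link
    # No alternative route left: this is the single-point-of-failure scenario.
    raise RuntimeError("no healthy link available: total loss of connectivity")


links = [Link("uplink-a", healthy=False), Link("uplink-b", healthy=True)]
print(select_path(links).name)  # uplink-b
```

With only one link in the list, a single failure leaves `select_path` with nothing to return, which is exactly the downtime that redundancy is meant to prevent.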

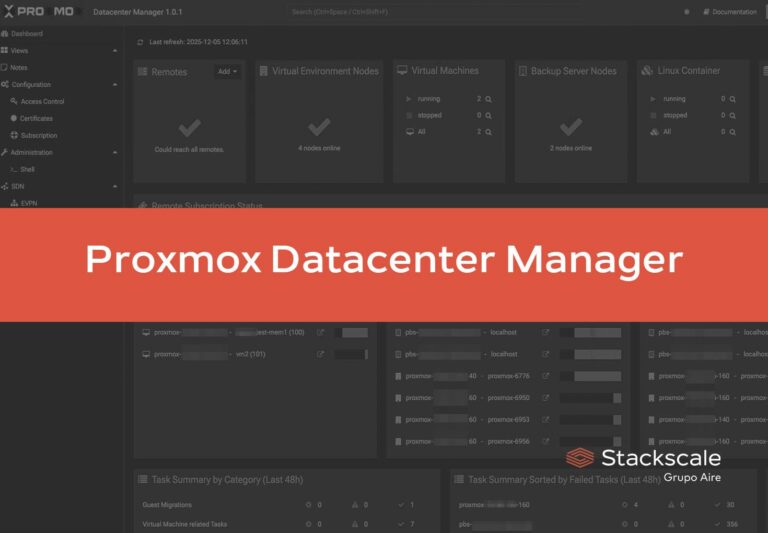

Stackscale's infrastructure solutions are built for high availability: the company's infrastructure minimizes downtime through a robust, redundant environment that keeps operations running even when individual components fail.

Redundancy models: N+1, 2N, and 2N+1

Redundancy models in data center design dictate the reliability and robustness of the infrastructure. Understanding the technical details of these models is crucial for tailoring the data center design to a business’s operational requirements and risk tolerance.

- N+1: This provides a straightforward approach to redundancy, where "N" represents the number of components required to operate the system under normal conditions, and the "+1" indicates one additional backup component, so the system can tolerate the failure of any single component.

- 2N: Every required component is fully duplicated, giving two complete, independent sets. If one set fails, including multiple simultaneous failures within that set, the other can carry the full load.

- 2N+1: This model goes one step beyond 2N by adding an extra component on top of the two complete sets, so the system stays fully redundant even while one component is down for a failure or maintenance. It suits systems where downtime is extremely costly or dangerous.
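The practical difference between these models shows up in how many units are installed and how many failures the system can absorb. The sketch below assumes a hypothetical load needing N = 4 identical units, each independently available 99% of the time, and treats all installed units as a shared pool in which any N working units can carry the load. Real 2N designs usually use two separate paths (often called A and B), so the pooled model is a simplification; the names `installed_units` and `k_of_m_availability` are illustrative.

```python
from math import comb


def installed_units(model: str, n: int) -> int:
    """Number of units installed for a load that needs `n` units."""
    if model == "N":
        return n
    if model == "N+1":
        return n + 1
    if model == "2N":
        return 2 * n
    if model == "2N+1":
        return 2 * n + 1
    raise ValueError(f"unknown model: {model}")


def k_of_m_availability(k: int, m: int, a: float) -> float:
    """Probability that at least k of m independent units (each up with probability a) are up."""
    return sum(comb(m, i) * a**i * (1 - a) ** (m - i) for i in range(k, m + 1))


N = 4     # hypothetical: the load needs 4 units
A = 0.99  # hypothetical per-unit availability

for model in ("N", "N+1", "2N", "2N+1"):
    m = installed_units(model, N)
    # In the pooled model, any units beyond N are spares that can fail without downtime.
    print(f"{model:>5}: {m} units, tolerates {m - N} failure(s), "
          f"availability ~ {k_of_m_availability(N, m, A):.8f}")
```

The availability figure is the binomial probability that at least N of the installed units are working at any given moment, which is why each additional spare yields diminishing but still meaningful gains.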

The relationship between redundancy levels and data center tiers

Redundancy levels in data centers are closely tied to data center tiers, typically classified from Tier 1 to Tier 4 (as in the Uptime Institute's Tier Classification System) according to their availability and performance. Each tier defines the redundancy features and uptime that can be expected from a data center.

Stackscale stands out for its fully redundant infrastructure, designed to provide a high-quality experience and backed by a guaranteed uptime of 99.9%.
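An uptime percentage is easier to reason about when it is converted into allowed downtime. This minimal sketch assumes a 365-day year and a 30-day month; the measurement period and exclusions of any actual SLA, including Stackscale's, are not specified here and would change the result. The function name `allowed_downtime` is illustrative.

```python
HOURS_PER_YEAR = 365 * 24                # 8,760 h; assumes a non-leap year
MINUTES_PER_30_DAY_MONTH = 30 * 24 * 60  # 43,200 min; assumes a 30-day month


def allowed_downtime(uptime_percent: float, period: float) -> float:
    """Maximum downtime permitted by an uptime percentage, in the same units as `period`."""
    return period * (1 - uptime_percent / 100)


print(f"{allowed_downtime(99.9, HOURS_PER_YEAR):.2f} hours per year")               # 8.76
print(f"{allowed_downtime(99.9, MINUTES_PER_30_DAY_MONTH):.1f} minutes per month")  # 43.2
```

In other words, 99.9% uptime still permits roughly 8.76 hours of downtime per year, which is why redundancy at every layer matters for services that need to stay well within that budget.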

In summary, an optimal redundancy strategy is essential for ensuring high availability and fault tolerance in data centers. Stackscale positions itself as an ideal partner for organizations seeking reliable, redundant infrastructure, offering resilient infrastructure and premium services that support operational continuity and business growth.